SLAM > Documentation

Hardware

Archive format

Dataset format

Monocular data

RGB-D data

RGB stereo data

IMU data

Raw data

Evaluation

Result submission

Hardware

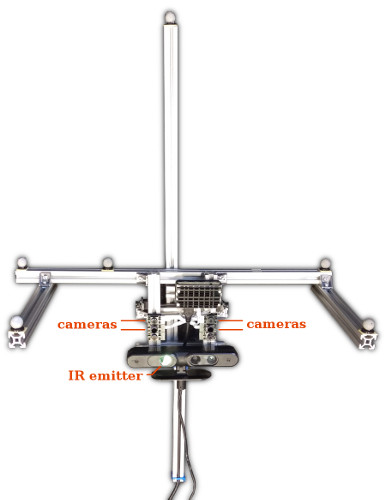

A custom camera rig was used to record the datasets. All cameras on this rig use a global shutter and are synchronized. Depth was estimated with active stereo, i.e., a stereo algorithm was used on two infrared cameras to estimate depth, and an infrared pattern was projected with the IR emitter of an Asus Xtion Live Pro to provide additional texture for stereo matching. The cameras on the Xtion were not used. Next to each infrared camera, we placed an RGB camera (with a Bayer pattern). Depth images are estimated for the viewpoint of the right infrared camera and are then re-projected into the viewpoint of the RGB camera next to it to obtain RGB-D data. Images of the other RGB camera can be used for stereo SLAM. Each of the four cameras in addition has an IMU on it.

Dataset format

The ETH3D SLAM benchmark can be used to evaluate visual-inertial mono, stereo, and RGB-D SLAM. Correspondingly, it is possible to download either all dataset images or only the relevant parts (for example, only monocular images). In the following, the format of the different data types is described.

The frame rate of most datasets is about 27.1 Hz, with the exception of the datasets whose names begin with "sfm_", which are recorded with about 13.6 Hz. This is because we used additional cameras for the latter datasets, which limits the frame rate of the camera system.

Monocular data

Monocular data follows this file system structure:

+── rgb

│ +── <timestamp1>.png

│ +── <timestamp2>.png

│ +── ...

+── calibration.txt

+── groundtruth.txt (for training datasets)

+── rgb.txt

Color images in the rgb folder are stored as PNG files. Please note that the images have been recorded while trying to avoid overexposure (which leaves no information in the overexposed areas). As a result, often many image areas are relatively dark (while however still containing information). In addition, we did not apply white-balancing to the images in order not to disturb the photometric consistency. While both of these decisions might be beneficial for SLAM systems, they are not well-suited for visualization to humans. Therefore, for visualization of the images or of 3D reconstruction results, we recommend to brighten up the colors and apply white balancing as needed.

The file calibration.txt provides the intrinsic calibration of the images, listing the following four parameters in a single line, separated by spaces: $$f_x ~~ f_y ~~ c_x ~~ c_y$$ All images are undistorted and follow the pinhole camera model, which means that a 3D point \((x, y, z)^T\) in the local coordinate system of a camera (with \(z > 0\)) can be projected into the image as follows: $$u = f_x \frac{x}{z} + c_x$$ $$v = f_y \frac{y}{z} + c_y$$ The convention for the pixel coordinate origin of the result is that (0, 0) is the center of the top-leftmost pixel (as opposed to the top-left corner of the image).

For training datasets, the ground-truth camera trajectory is given in the file groundtruth.txt. Each line in this file gives one pose measurement in the following format:

timestamp tx ty tz qx qy qz qw

Each line starts with the timestamp in seconds. Then the camera pose transformation follows. It transforms points from the local image's frame to the global frame. The transformation parameters are given as: tx ty tz qx qy qz qw, where t is the translation vector and q is a quaternion describing the rotation (as for example implemented in the Eigen linear algebra library).

The file rgb.txt is provided for compatibility with the TUM RGB-D benchmark. It lists all image files in the dataset. Each file is listed on a separate line, which is formatted like:

timestamp file_path

RGB-D data

The RGB-D video format follows that of the TUM RGB-D benchmark for compatibility reasons. In addition to the monocular data, downloading the RGB-D data adds the following files and folders:

+── depth

│ +── <timestamp1>.png

│ +── <timestamp2>.png

│ +── ...

+── associated.txt

+── depth.txt

The depth and color images in the corresponding folders have the same viewpoint and dimensions and are synchronized. Files having the same name were recorded at the same time.

Depth images in the depth folder are stored as 16-bit PNG files. A pixel's value must be divided by 5000 to obtain its depth value in meters. Pixels without a depth estimate have the value zero.

The files associated.txt and depth.txt are provided for compatibility with the TUM RGB-D benchmark. They list all image files in the dataset. In depth.txt, each corresponding file is listed on a separate line that is formatted like:

timestamp file_path

In associated.txt, corresponding pairs of depth and image files are listed in the format:

rgb_timestamp rgb_file_path depth_timestamp depth_file_path

For the ETH3D SLAM benchmark, it is not necessary to use these files. Corresponding depth and color images can be easily determined since they always have exactly the same filename.

RGB stereo data

In addition to the monocular data, downloading the stereo data adds the following files and folders:

+── rgb2

│ +── <timestamp1>.png

│ +── <timestamp2>.png

│ +── ...

+── calibration2.txt

+── extrinsics_1_2.txt

The color images of a second color camera are stored as PNG files in the rgb2 folder. As for the other images, these images are undistorted pinhole camera images. Please note that they are not stereo-rectified with the other images however, i.e., corresponding pixels in the images in general do not lie on the same image row.

calibration2.txt gives the calibration of the second color camera, analogous to calibration.txt. See the monocular data description for an explanation of the camera model.

The file extrinsics_1_2.txt specifies the 6-DOF rigid-body transformation between the camera "1" (corresponding to the images in the rgb and possibly depth folders), and the camera "2" (corresponding to the images in the rgb2 folder). This transformation T is given in this file as a 3x4 matrix: $$T_{00} ~~ T_{01} ~~ T_{02} ~~ T_{03}$$ $$T_{10} ~~ T_{11} ~~ T_{12} ~~ T_{13}$$ $$T_{20} ~~ T_{21} ~~ T_{22} ~~ T_{23}$$ A 3D point can be transformed from camera 2 to camera 1 by homogeneously multiplying it with this matrix from the right.

IMU data

Downloading the IMU data adds those additional files:

+── imu.txt (corresponding to rostopic /uvc_camera/cam_2/imu)

+── imu2.txt (corresponding to rostopic /uvc_camera/cam_3/imu)

+── imu3.txt (corresponding to rostopic /uvc_camera/cam_0/imu)

+── imu4.txt (corresponding to rostopic /uvc_camera/cam_1/imu)

+── sequence_calibration.txt (except for SfM datasets)

Each camera contains an IMU, and each IMU file thus contains the data of the IMU of a specific camera. The file imu.txt contains the data of the right RGB camera, which is the viewpoint that the rgb and depth data is provided in. The file imu2.txt contains the data of the left RGB camera, corresponding to the rgb2 folder. The files imu3.txt and imu4.txt contain the IMU data of the right and left infrared cameras (for these cameras, no images are provided, except in the raw data format).

In each file, a pair of gyroscope and accelerometer measurements is given on each line. The format of each line is as follows:

timestamp angular_velocity[rad/sec](x y z) linear_acceleration[m/s^2](x y z)

For datasets other than those with Structure-from-Motion ground truth (whose names start with sfm_), there is also a file sequence_calibration.txt, which specifies some values used in the dataset's calibration. In particular, the value for mocap_timescaling_camera specifies how the camera and IMU timestamps were scaled to match the timestamps of the motion capturing system. This value should always be very close to 1, however. For the Structure-from-Motion datasets, no scaling of timestamps was performed.

The IMU model on the cameras is the ICM20608 by Invensense. It may be calibrated with Kalibr using the following IMU configuration YAML file (adapted to the specific rostopic): imu.yaml. We provide a Kalibr camera calibration here which was computed from the calibration dataset for mocap datasets (2018-08-13-15-44-25-camera-calibration.bag) and can be used as input: camera_calib_radtan.zip. In the file camchain_color_only.yaml, the infrared cameras were removed from the camchain. This makes it possible to use it for IMU calibration with the "sequence calibration" subsets of the calibration sequences, in which the infrared projection is active. An IMU calibration obtained in this way is provided here: camera_imu_calib_radtan.zip. Please note that there would be several ways to improve upon that calibration (as we did in our own calibration program for the camera geometry): A better Debayering method might be used for the RGB images, a non-parametric camera model might be used to better fit the true camera distortion everywhere in the images, and better feature detection and tracking might be used to detect the checkerboard corners.

Raw data

Downloading the raw data for a dataset yields the following files:

+── calibration_dataset.txt (for training datasets)

+── raw_dataset.bag

+── sequence_calibration.txt

raw_dataset.bag is a compressed rosbag file containing the raw camera, IMU, and (for training datasets) ground truth data. With a ROS installation, it can be inspected with 'rosbag info raw_dataset.bag'. If desired, it can be uncompressed with 'rosbag decompress raw_dataset.bag'.

The text file calibration_dataset.txt contains the name of the calibration dataset which is associated with this dataset (one calibration dataset may be associated with several datasets). Since they contain additional views of the scene, calibration datasets are only provided for training datasets. Thus, for test datasets only self-calibration is possible. The format of calibration datasets is as follows for datasets with motion captured ground truth:

+── calibration.bag

+── seq_camera_refinement_1.txt

+── seq_sequence_calib_1.txt

+── seq_sequence_calib_2.txt

calibration.bag contains additional video data which can be used for dataset calibration. It consists of several parts: two sequence calibration subsequences (one recorded before the actual dataset(s) and one afterwards), and a camera refinement subsequence. For the camera refinement subsequence, the camera's infrared projection is off and the camera is moved very slowly. It is intended to refine the camera extrinsic parameters. For the sequence calibration subsequences, the camera's infrared projection is on and the camera is moved quickly. They are intended to compute the camera-mocap calibration. See the supplemental material of our 'BAD SLAM' paper for more details.

The text files in the archive specify the timestamp ranges of the different subsequences. The first number is the first timestamp in the subsequence, the second number is the last timestamp in it.

If you perform a custom calibration, keep in mind that our processing includes an alignment step between the motion-capturing timestamps and the image timestamps. The image and IMU timestamps in the processed datasets are therefore transformed. The results should be reported in the motion-capturing timestamp frame. The image and IMU timestamps can be transformed with the values from the file sequence_calibration.txt from the dataset files as follows:

mocap_timestamp = (mocap_timescaling_camera * ((1.e-9 * camera_timestamp_nanoseconds) - timescaling_anchor)) + timescaling_anchor + mocap_timeoffset_camera

For datasets with Structure-from-Motion ground truth, the format of the calibration datasets is as follows:

+── calibration.bag

+── seq_camera_refinement_1.txt

+── seq_camera_refinement_2.txt

+── seq_sfm_1.txt

The 'sfm' subsequence is the part of the dataset on which Structure-from-Motion was run to determine the ground truth poses. This should be longer than the actual dataset subsequence.

Evaluation

The benchmark evaluates commonly used absolute and relative trajectory errors. It is possible to evaluate both training and test set results on this website by uploading the results as described below. For evaluating training results locally, the evaluation program is provided as source code (on GitHub). This program depends on Eigen. Using CMake, it should compile for Linux and Windows. A script to automate the evaluation on all datasets and output comparison plots for multiple methods is provided here: evaluate_eth3d_slam_results.py. It works together with the run script that is also referenced below in the section on result submission: run_on_eth3d_slam_datasets.py.

Result submission

Important: Please note that we are interested in the methods' runtimes and request these as mandatory information for each submission. Therefore, please remember to record the runtime of your method when you submit, on each dataset individually.

To submit your results, you need to sign up for an account and login.

Both the training and test datasets can be evaluated online. Partial submissions (on a subset of datasets) are possible, but we only compute category averages (and therefore rank a method in a category) if results for all of the datasets within a category are provided. If a method fails on a dataset and you would still like to rank it in a category containing this dataset, you can provide an empty result file for this dataset.

Submission format

Results for a method must be uploaded as a single .zip or .7z file (7z is preferred due to smaller file sizes), which must in its root contain a folder named slam with the results. There must not be any extraneous files or folders in the archive, or the submission will be rejected. Upper- / lowercase spelling matters for all directories and filenames.

For a given dataset, the trajectory estimated by the SLAM algorithm must be provided as a text file with the same name as the dataset, with file extension .txt. For example, for the dataset "mannequin_1", the result file must be named "mannequin_1.txt". Each line in the result file specifies the camera pose for one image. Lines must be ordered by increasing timestamp. The format of each line is the same as the format of the ground truth data:

timestamp tx ty tz qx qy qz qw

Each camera pose is specified as the transformation from the local camera frame to the global frame. You can choose an arbitrary global frame, for example, by setting the first frame's pose to the identity. The transformation parameters are given as: tx ty tz qx qy qz qw, where t is the translation vector and q is a quaternion describing the rotation (as for example implemented in the Eigen library).

Poses should be given for all input images. If a pose for an image is missing, it will be filled in automatically during evaluation based on the provided poses.

In addition to the trajectory, for each result file a metadata file having the same name, but with extension _runtime.txt, must be provided. For example, for the dataset "mannequin_1" with result file "mannequin_1.txt", the name of this additional file should be "mannequin_1_runtime.txt". This file is required to contain the method's runtime for this dataset in seconds. The format is a single floating-point number as plain text.

In sum, the file structure of a submission looks like this:

+── slam

│ +── mannequin_1.txt

│ +── mannequin_1_runtime.txt

│ +── dino.txt

│ +── dino_runtime.txt

│ +── ...

As an example, a Python script to run BAD SLAM on the dataset is provided here: run_on_eth3d_slam_datasets.py. A second script to zip the results in the correct format for uploading is provided here: zip_eth3d_results.py. These scripts can be easily adapted to run other SLAM methods.